Remote IoT Batch Job Example: Processing Data From Yesterday On Remote Systems

Have you ever thought about how much information connected devices gather every day? It is a lot, and sometimes you need to look back at what happened yesterday to make sense of it. This is where a remote IoT batch job comes in. It is a way to process large chunks of data from distant sensors and gadgets without being physically present at the devices. Insights from data collected even a day ago can inform better decisions later on, and that is what we'll talk about here.

Picture this: you have hundreds, maybe thousands, of small sensors spread across different sites. They are constantly collecting readings such as temperature, humidity, or power consumption. You don't always need these values the instant they are measured. Often it is more useful to gather all the data from a fixed period, such as everything from yesterday, and process it in one go. Handling data in groups rather than one reading at a time is what people call a "batch job," and running it from a location far from the devices makes it "remote."

So we're going to explore what a remote IoT batch job looks like, especially when you're interested in data from the previous day. We'll look at why this setup is useful, which components you generally need, and then walk through a simple example. It is a practical way to handle large volumes of device data, it can save a lot of effort, and it gives you a clear picture of what is happening at sites you cannot easily visit.

Table of Contents

- What is a Remote IoT Batch Job?

- Key Components of a Remote IoT Batch System

- A Practical Remote IoT Batch Job Example

- Benefits of This Approach

- Common Challenges and How to Handle Them

- Frequently Asked Questions

- Conclusion

What is a Remote IoT Batch Job?

A remote IoT batch job is, simply put, a set of automated tasks that process information from connected devices located far away. These tasks run on a schedule, often once a day, and process the data that has built up over a period. Think of it like collecting all your mail at the end of the day instead of checking for each letter as it arrives. It is a common way to work with large amounts of data, especially when you don't need instant updates.

The "IoT" part means we're dealing with the Internet of Things: everyday objects or specialized sensors that can connect to the internet to send or receive data. Think of smart thermostats, industrial sensors, or small weather stations. They sit out in the field quietly gathering readings, and those readings are what we want to process.

The "batch job" aspect means that instead of processing each piece of data as it arrives (real-time processing), you wait until you have a whole group, or "batch," and then process it all at once. This is often more efficient for large datasets, because the fixed costs of a run, such as starting the job, connecting to storage, and scanning the data, are paid once per batch rather than once per reading. It's a bit like doing laundry: you wait for a full load rather than washing one sock at a time.


Why Process Data from Yesterday?

You might wonder why data from "yesterday" gets so much attention. There are several good reasons. First, past data helps you spot trends. If you're tracking temperature at a remote location, knowing yesterday's average helps you tell whether today is unusually hot or cold. This historical view is useful for reports, analysis, and planning, and because the day has already ended, it provides a stable snapshot.

Another reason is efficiency. Gathering all of yesterday's data at once can be less demanding on your network and computing systems than processing every reading as it happens, which can lower costs and make your systems more stable. With thousands of sensors, for example, processing every single reading in real time could overwhelm a central system.

Furthermore, many business decisions and reports are based on daily, weekly, or monthly summaries, and processing yesterday's data fits neatly into these cycles. It lets you produce daily reports that are complete and accurate, reflecting all of the previous day's activity. This is particularly helpful for energy consumption reports, daily production summaries, and environmental monitoring.

The "Remote" Aspect

The "remote" part of "remote IoT batch job" is key. It means the devices collecting the data are not in the same place as the systems that process it. They could be in a distant factory, on a farm, in another city, or even in space. This distance brings its own considerations, such as how to get the data securely from the device to your processing center.

Being remote means devices need reliable ways to send their data, often over internet connections, cellular networks, or satellite links. The batch job then runs on a central server or in a cloud environment, far from where the data was gathered. This separation lets you manage and analyze data from many distributed locations in one central place, which is very convenient.

This setup also means you don't need a lot of computing power right at the device itself. The devices can be simple and low-cost, just focusing on collecting data. The heavy lifting of processing and analysis happens elsewhere, where you have more resources. This makes it possible to deploy IoT solutions in places where it would be difficult or too expensive to have complex computing infrastructure, which is a big plus.

Key Components of a Remote IoT Batch System

To make a remote IoT batch job work, you need several pieces that fit together. Each part plays a specific role: getting the data from the devices, storing it, processing it, and showing you the results. It's a bit like an assembly line, where each station does its part.

IoT Devices and Sensors

At the very beginning of the process are the IoT devices and their sensors, the "eyes and ears" out in the field. They could be anything from a simple temperature sensor in a warehouse to a complex machine monitoring its own performance. Their main job is to collect specific types of data consistently and reliably, and they are the source of all the information you'll be working with.

These devices are often designed to be energy-efficient, especially if they run on batteries. They might collect data at regular intervals, like every few minutes, or only when something important happens. They usually have a way to connect to a network so they can send their collected readings back to a central system. Think of them as tiny data reporters, always on the job, gathering bits of information for you.
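
As a concrete picture of one of these "tiny data reporters," here is a minimal Python sketch of a single time-stamped reading and the compact JSON payload a device might send. The `Reading` fields, their units, and the JSON encoding are assumptions chosen for illustration; real devices often use a vendor-specific or binary format instead.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Reading:
    """One time-stamped sample from a field sensor (hypothetical schema)."""
    sensor_id: str
    ts: str              # ISO 8601 timestamp with UTC offset
    moisture_pct: float  # soil moisture, percent
    temp_c: float        # air temperature, degrees Celsius
    humidity_pct: float  # relative humidity, percent


def make_reading(sensor_id: str, moisture: float, temp: float, humidity: float,
                 now: Optional[datetime] = None) -> Reading:
    """Stamp a reading with the current UTC time, or with `now` if given (useful in tests)."""
    stamp = now if now is not None else datetime.now(timezone.utc)
    return Reading(sensor_id, stamp.isoformat(timespec="seconds"), moisture, temp, humidity)


def to_payload(reading: Reading) -> bytes:
    """Serialize without extra whitespace; small payloads help battery-powered radios."""
    return json.dumps(asdict(reading), separators=(",", ":")).encode("utf-8")
```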

Data Ingestion Layer

Once the IoT devices collect their data, they need a way to send it to a central place. This is the job of the data ingestion layer, the gateway that receives the data streams from all your devices. It needs to handle a lot of incoming data at once and make sure it is received without errors. It's like a busy postal service that accepts and sorts the mail coming in from many places.

Common tools for this layer include message brokers and cloud services designed for IoT data. These services can queue messages, manage device connections, and often provide security features so that only authorized devices can send data. They act as a buffer, so a sudden burst of incoming data is held until it can be processed rather than dropped. This step is important for keeping everything organized.
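
To show what "acting as a buffer" means, here is a small in-process sketch of an ingestion queue that rejects malformed messages and unknown devices, then holds accepted messages until a storage writer drains them. The `IngestionBuffer` class and the `AUTHORIZED_DEVICES` allowlist are hypothetical stand-ins for a real message broker or cloud IoT service, which would authenticate devices with credentials rather than trusting a self-reported ID.

```python
import json
import queue
from typing import List, Optional

# Hypothetical allowlist; a real broker would authenticate devices with
# certificates or tokens instead of trusting the sensor_id in the message.
AUTHORIZED_DEVICES = {"field1-A", "field1-B", "field2-A"}


class IngestionBuffer:
    """Minimal in-process stand-in for a message broker's queue."""

    def __init__(self, maxsize: int = 10_000):
        self._q = queue.Queue(maxsize=maxsize)
        self.rejected = 0

    def accept(self, raw: bytes) -> bool:
        """Validate one incoming message and enqueue it; return False if rejected."""
        try:
            msg = json.loads(raw)
        except (json.JSONDecodeError, UnicodeDecodeError):
            self.rejected += 1
            return False
        if not isinstance(msg, dict) or msg.get("sensor_id") not in AUTHORIZED_DEVICES:
            self.rejected += 1
            return False
        # Wait up to 5 s for space rather than silently dropping data;
        # raises queue.Full if the buffer stays full.
        self._q.put(msg, timeout=5)
        return True

    def drain(self, limit: Optional[int] = None) -> List[dict]:
        """Hand queued messages to the storage writer."""
        out = []
        while limit is None or len(out) < limit:
            try:
                out.append(self._q.get_nowait())
            except queue.Empty:
                break
        return out
```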

Data Storage

After the data is received, it needs a place to live. This is the data storage component. For batch processing, you usually store the raw, unprocessed data for a certain period, like a day or a week. This allows your batch job to access all the data it needs for a specific time window. Databases, data lakes, or cloud storage services are typically used here.

The choice of storage depends on the type of data and how you plan to use it. For structured sensor readings, a time-series database might be a good fit; for less structured data, a data lake could be better. Either way, the storage needs to hold a lot of data and allow efficient retrieval when the batch job runs. Think of it as a large, well-organized library for all your collected information.
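
Here is a minimal sketch of raw-reading storage, using Python's built-in `sqlite3` module as a stand-in for a time-series database. The `raw_readings` table, its columns, and the choice to store timestamps as UTC epoch seconds are assumptions for illustration.

```python
import sqlite3
from typing import Iterable, Tuple

# SQLite stands in for a time-series database or data lake here;
# table and column names are illustrative assumptions.
SCHEMA = """
CREATE TABLE IF NOT EXISTS raw_readings (
    sensor_id    TEXT    NOT NULL,
    ts           INTEGER NOT NULL,   -- Unix epoch seconds, UTC
    moisture_pct REAL,
    temp_c       REAL,
    humidity_pct REAL,
    PRIMARY KEY (sensor_id, ts)      -- a re-sent reading overwrites, not duplicates
);
CREATE INDEX IF NOT EXISTS idx_raw_ts ON raw_readings (ts);
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the store and make sure the schema exists."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def store(conn: sqlite3.Connection,
          rows: Iterable[Tuple[str, int, float, float, float]]) -> None:
    """Insert (sensor_id, ts, moisture, temp, humidity) tuples in one transaction."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO raw_readings VALUES (?, ?, ?, ?, ?)", rows
        )
```

The composite primary key means a reading that a gateway re-sends after a dropped connection replaces its earlier copy instead of being counted twice, and the separate index on `ts` supports the time-window scan a daily batch job performs.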

Processing Engine

The processing engine is where the actual work happens. This is the part of the system that takes the raw data from storage, applies various calculations, filters, and transformations to it, and turns it into something meaningful. For a "since yesterday" batch job, this engine would pull all the data from the previous day and then perform the necessary analysis. This could involve calculating averages, totals, identifying anomalies, or creating summaries.
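
As one example of "identifying anomalies," the sketch below flags values that sit far from the batch mean using a z-score rule. This is a deliberately simple technique assumed for illustration, not a recommendation for every sensor type; the function name and the default threshold `k = 3.0` are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def flag_anomalies(values: Sequence[float], k: float = 3.0) -> List[int]:
    """Return indices of values more than k population standard deviations
    from the batch mean (a simple z-score rule)."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = pstdev(values, mu)
    if sigma == 0:  # all values identical: nothing stands out
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) > k * sigma]
```

One caveat: in a batch of `n` values, no point can lie more than `sqrt(n - 1)` population standard deviations from the mean, so with `k = 3` a lone outlier can only be flagged when the batch has more than 10 readings.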

Tools for processing range from simple scripts written in languages like Python to more powerful big data frameworks. The engine needs to handle the volume of data efficiently and perform the required computations. It's the brain of the operation, turning raw numbers into valuable insights.

Scheduling and Orchestration

Since we're talking about batch jobs, they need to run at specific times. This is handled by the scheduling and orchestration component. This part makes sure that the batch job starts exactly when it's supposed to, for example, every morning at 1 AM, to process all of yesterday's data. It also manages the order of operations, making sure data is collected before it's processed, and so on. It's like a conductor for an orchestra, making sure every instrument plays at the right time.

Common tools for scheduling include cron jobs on Linux servers and dedicated workflow orchestration platforms. These tools can also retry a job if it fails, send alerts, and manage dependencies between tasks. This component is what automates the entire process, so it runs smoothly every day without human intervention.
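
Orchestration platforms usually offer retries as a setting. With plain cron (for example, a crontab schedule of `0 1 * * *`, which fires at 1:00 AM daily) you would handle retries yourself. The sketch below wraps a job in exponential backoff; `run_with_retries` and its defaults are hypothetical, and `sleep` is injectable so the logic can be tested without actually waiting.

```python
import logging
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T], attempts: int = 3,
                     base_delay: float = 60.0,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Run task(); on failure wait base_delay, 2*base_delay, ... and try again.

    Re-raises the last exception after the final attempt so the scheduler
    can record the failure and send an alert.
    """
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == attempts:
                raise
            delay = base_delay * 2 ** (attempt - 1)
            logging.warning("attempt %d/%d failed (%s); retrying in %.0fs",
                            attempt, attempts, exc, delay)
            sleep(delay)
    raise ValueError("attempts must be at least 1")
```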

Reporting and Visualization

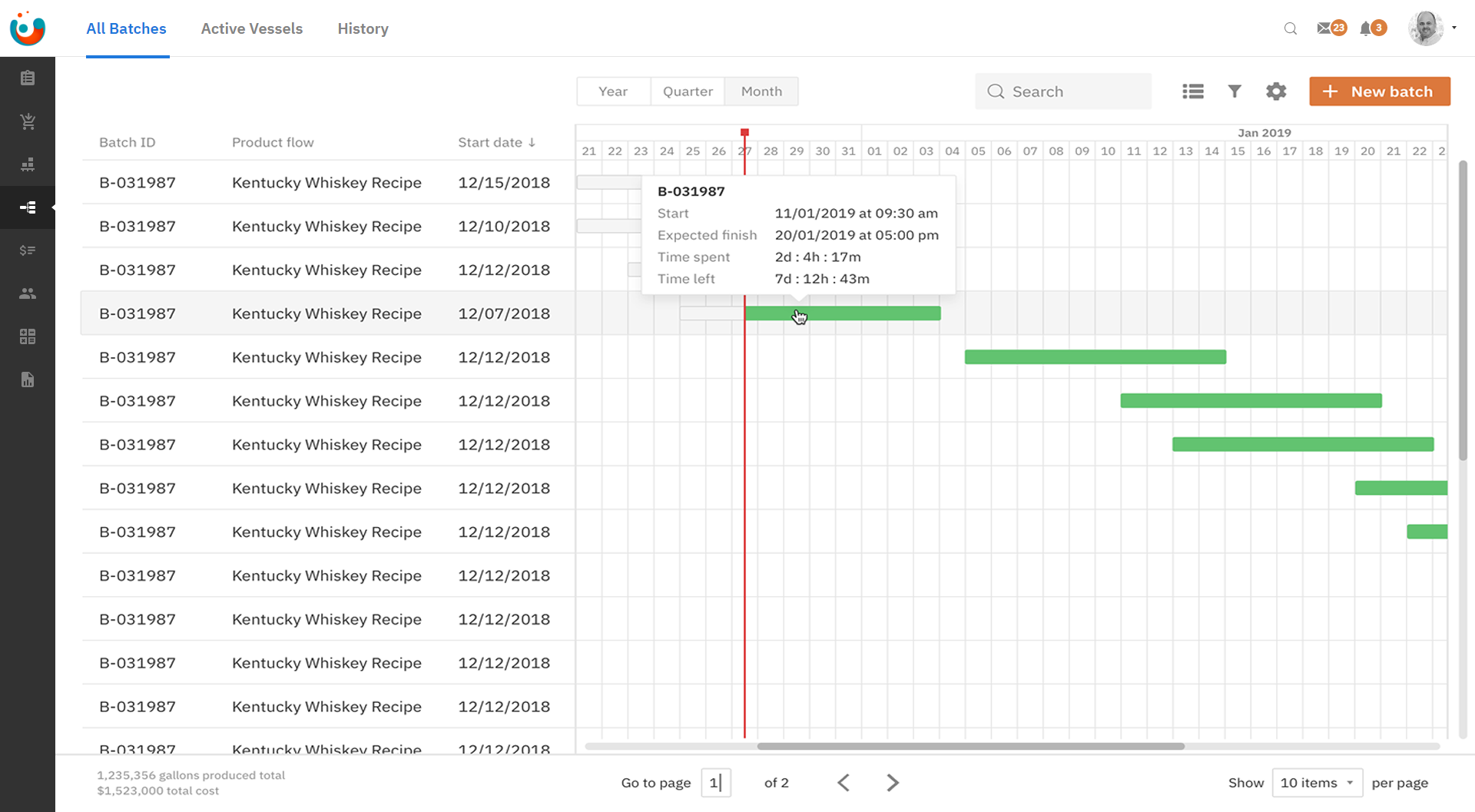

Finally, once the data is processed, you need a way to see and understand the results. This is where reporting and visualization tools come in. They take the processed data and turn it into charts, graphs, dashboards, or simple reports that are easy for people to read and understand. This makes the insights from your data accessible to decision-makers.

These tools help you spot trends, identify problems, and track performance over time. For example, a dashboard might show the average temperature in your remote warehouses for each day of the past month, or the total energy consumption from yesterday. This final step is what makes all the previous effort worthwhile, providing clear answers to your questions.

A Practical Remote IoT Batch Job Example

Let's walk through a simple, practical example to see how these pieces fit together in a remote IoT batch job that focuses on yesterday's data. The scenario has real-world applications, and it is small enough to adapt as a project of your own.

Scenario: Smart Farm Monitoring

Imagine a large agricultural farm with fields spread out over many acres. To optimize crop growth and save water, the farm uses IoT sensors placed in different parts of the fields. These sensors measure soil moisture, temperature, and humidity every 15 minutes. The farm manager wants a daily report, delivered every morning, summarizing the average conditions in each field from the previous day. This helps them decide where to irrigate and which crops might need extra attention.

The sensors are remote, meaning they are far from the farm office where the reports are generated. They communicate wirelessly with a central gateway on the farm, which forwards the data to a cloud service. The farm manager doesn't need real-time alerts about every small change in soil moisture, but a summary of yesterday's conditions is very useful for planning the day's activities. This is a classic case for a remote IoT batch job.

How the Batch Job Works

The batch job for our smart farm runs every morning, say at 3:00 AM. Its main goal is to collect all the soil moisture, temperature, and humidity readings recorded during the previous calendar day, from midnight at the start of yesterday up to, but not including, midnight at the start of today, in the farm's local time zone. Once it has that data, it calculates the average moisture, temperature, and humidity for each sensor location, and it may then combine these averages into a field-wide summary.
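
Defining "yesterday" precisely matters. A minimal sketch, assuming the job knows the farm's time zone: treat the day as a half-open interval from yesterday's midnight up to, but not including, today's midnight, so a reading stamped at 23:59:30 is not lost and no reading is counted twice. The function names are hypothetical. In a real deployment you would pass a DST-aware zone such as `zoneinfo.ZoneInfo("Europe/Madrid")` (an example zone, not from the scenario); on daylight-saving changeover days the resulting epoch range then covers 23 or 25 hours, which is the true length of that local day.

```python
from datetime import datetime, time, timedelta, tzinfo
from typing import Optional, Tuple


def yesterday_window(tz: tzinfo, now: Optional[datetime] = None) -> Tuple[datetime, datetime]:
    """Return [start, end) for the previous calendar day in time zone tz.

    `now` should be timezone-aware; it is converted to tz first, so a job
    running just after midnight UTC still picks the farm's local "yesterday".
    """
    local_now = now.astimezone(tz) if now is not None else datetime.now(tz)
    today = local_now.date()
    start = datetime.combine(today - timedelta(days=1), time(0), tzinfo=tz)
    end = datetime.combine(today, time(0), tzinfo=tz)  # exclusive upper bound
    return start, end


def to_epoch_range(start: datetime, end: datetime) -> Tuple[int, int]:
    """Convert to Unix seconds for querying a table keyed on UTC epoch time."""
    return int(start.timestamp()), int(end.timestamp())
```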

The processed summary data is then stored in a separate, more organized database, ready for reporting. Finally, a report is generated, perhaps as a simple email or a dashboard update, which the farm manager can check at the start of the day. The entire process is automated, so no one needs to pull data or run calculations by hand.

Step-by-Step Walkthrough

Let's break the process down into individual steps, showing how the data flows and what happens at each stage. It's a bit like following a recipe.

Data Collection

The IoT sensors in the farm fields collect soil moisture, temperature, and humidity readings every 15 minutes, twenty-four hours a day, which comes to up to 96 readings per sensor per day. Each reading is time-stamped, so we know exactly when it was taken. The sensors are low-power devices designed to run for long periods on batteries, which is important in remote locations.

Data Transmission

The sensors send their readings wirelessly to a local gateway on the farm. The gateway bundles the data and sends it over the internet to a cloud-based data ingestion service, which is designed to receive large volumes of data from many devices securely and reliably, like a central post office for all the sensor messages. This happens continuously throughout the day.
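
The gateway's bundling step can be as simple as grouping readings into fixed-size batches, so the uplink makes one request per batch rather than one per reading. This sketch assumes a JSON-array payload and a default batch size of 200; both are illustrative choices, and `bundle` is a hypothetical helper.

```python
import json
from typing import Iterable, Iterator, List


def bundle(readings: Iterable[dict], max_per_batch: int = 200) -> Iterator[bytes]:
    """Group individual sensor readings into JSON-array payloads."""
    if max_per_batch < 1:
        raise ValueError("max_per_batch must be at least 1")
    batch: List[dict] = []
    for r in readings:
        batch.append(r)
        if len(batch) == max_per_batch:
            yield json.dumps(batch, separators=(",", ":")).encode("utf-8")
            batch = []
    if batch:  # flush the final, partially filled bundle
        yield json.dumps(batch, separators=(",", ":")).encode("utf-8")
```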

Batch Processing Logic

Every morning at 3:00 AM, a scheduled batch job starts in the cloud. It connects to the data storage where the raw sensor readings are kept and queries for every data point recorded from midnight at the start of the previous day up to, but not including, midnight today. This is the "since yesterday" part of our example.
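
The query itself can be a plain range filter. This sketch assumes a hypothetical `raw_readings` table with timestamps stored as UTC epoch seconds and takes the window boundaries as arguments; the `ts < ?` upper bound implements the exclusive end of the day.

```python
import sqlite3
from typing import List, Tuple


def fetch_window(conn: sqlite3.Connection, start_epoch: int,
                 end_epoch: int) -> List[Tuple]:
    """Pull every raw reading with start <= ts < end (a half-open interval)."""
    return conn.execute(
        """
        SELECT sensor_id, ts, moisture_pct, temp_c, humidity_pct
        FROM raw_readings
        WHERE ts >= ? AND ts < ?
        ORDER BY sensor_id, ts
        """,
        (start_epoch, end_epoch),
    ).fetchall()
```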

Once it has yesterday's data, the job performs its calculations. For each sensor, it computes the average soil moisture, temperature, and humidity over that 24-hour period, and it might also record the minimum and maximum values if those are useful. This turns a large number of individual readings into a few meaningful summary numbers.
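
At one reading every 15 minutes, each sensor contributes up to 96 readings per day, which this step condenses into a handful of numbers. A minimal sketch using Python's `statistics` module; the row layout `(sensor_id, ts, moisture, temp, humidity)` and the `summarize` name are assumptions.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Optional, Tuple

METRICS = ("moisture_pct", "temp_c", "humidity_pct")
Row = Tuple[str, int, Optional[float], Optional[float], Optional[float]]


def summarize(rows: Iterable[Row]) -> Dict[str, dict]:
    """Collapse one day of rows into per-sensor avg/min/max/count per metric."""
    per_sensor = defaultdict(lambda: {m: [] for m in METRICS})
    for sensor_id, _ts, *values in rows:
        for metric, v in zip(METRICS, values):
            if v is not None:  # a missing reading is skipped, not counted as zero
                per_sensor[sensor_id][metric].append(v)

    summary = {}
    for sensor_id, series in per_sensor.items():
        summary[sensor_id] = {
            metric: {"avg": round(mean(vals), 2), "min": min(vals),
                     "max": max(vals), "n": len(vals)}
            for metric, vals in series.items()
            if vals  # omit metrics with no valid readings that day
        }
    return summary
```

A plain mean weights every reading equally, which is reasonable when readings are evenly spaced. If a sensor was offline for part of the day, the `n` count makes that visible, since its remaining readings over-represent the hours when it was reporting.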

Data Storage and Access

The calculated averages and summaries are then saved into a separate, more organized database table. An entry might look like "Field 1, Sensor A, Date: 2024-05-20, Avg Moisture: 65%, Avg Temp: 22 °C, Avg Humidity: 70%." This processed data is much easier to query and use for reporting than the raw, individual readings.
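
Writing the summary can be made safe to repeat: if the job is re-run for the same day, the row should be overwritten rather than duplicated. A sketch with `sqlite3`, assuming a hypothetical `daily_summary` table and input shaped as `{sensor_id: {"moisture_pct": avg, "temp_c": avg, "humidity_pct": avg}}`.

```python
import sqlite3
from typing import Dict


def save_daily_summary(conn: sqlite3.Connection, day: str,
                       summary: Dict[str, Dict[str, float]]) -> None:
    """Write one row per sensor per day. The (sensor_id, day) primary key plus
    INSERT OR REPLACE makes a re-run of the same day overwrite, not duplicate."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS daily_summary (
               sensor_id        TEXT NOT NULL,
               day              TEXT NOT NULL,  -- ISO date, e.g. '2024-05-20'
               avg_moisture_pct REAL,
               avg_temp_c       REAL,
               avg_humidity_pct REAL,
               PRIMARY KEY (sensor_id, day))"""
    )
    with conn:  # one transaction for the whole day's rows
        conn.executemany(
            "INSERT OR REPLACE INTO daily_summary VALUES (?, ?, ?, ?, ?)",
            [
                (sensor_id, day, s.get("moisture_pct"), s.get("temp_c"),
                 s.get("humidity_pct"))
                for sensor_id, s in summary.items()
            ],
        )
```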

Reporting

After the processed data is stored, another part of the batch job (or a separate, linked process) generates the daily report. This might be a simple email to the farm manager containing a table of yesterday's average conditions for each field. Alternatively, the processed data could update a dashboard that the farm manager checks on a computer or tablet. Either way, it gives a quick, clear overview of the previous day's conditions, which is very helpful for daily operations.
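
The email variant of the report can start as a fixed-width text table. This sketch only builds the message body; actually sending it (for example with Python's `smtplib` and `email.message.EmailMessage`) is left out. The `format_report` name and its input shape, a mapping from field name to average values, are assumptions.

```python
from typing import Dict, Optional


def format_report(day: str, fields: Dict[str, Dict[str, float]]) -> str:
    """Render per-field averages as a plain-text table for an email body.
    Missing values print as 'n/a'."""
    def cell(v: Optional[float]) -> str:
        return f"{v:.1f}" if v is not None else "n/a"

    lines = [
        f"Farm conditions for {day}",
        f"{'Field':<10}{'Moisture %':>12}{'Temp °C':>10}{'Humidity %':>12}",
    ]
    for name in sorted(fields):
        row = fields[name]
        lines.append(
            f"{name:<10}{cell(row.get('moisture_pct')):>12}"
            f"{cell(row.get('temp_c')):>10}{cell(row.get('humidity_pct')):>12}"
        )
    return "\n".join(lines)
```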

Benefits of This Approach

Using a remote IoT batch job for yesterday's data offers several advantages. First, it is efficient. Processing data in batches lets you use computing resources during off-peak hours, when they may be cheaper or less busy, which can save money and make your systems run more smoothly.

Another benefit is reliability. Because the job processes data that has already been collected and stored, temporary network issues or device glitches are less likely to cause lost information. If a sensor or its gateway goes offline briefly and re-sends its buffered readings once reconnected, that data can still arrive before the batch job runs hours later. This makes your data processing more forgiving.

It also simplifies your system design. You don't need complex real-time streaming setups if your use case only requires daily summaries. This can make the system easier to build, maintain, and troubleshoot. It's a more straightforward path to getting useful insights from your remote devices, too. Plus, it gives you a consistent, historical view, which is often what you need for long-term planning and analysis.

Common Challenges and How to Handle Them

Even with all its benefits, setting up a remote IoT batch job comes with a few hurdles. One common challenge is inconsistent data. A remote sensor might send bad readings, or it might stop sending data for a while. To handle this, your processing logic needs to spot bad data points and either fix or ignore them, typically in a data cleaning step that runs before the main processing.
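
A simple cleaning pass checks each value against a physically plausible range, blanks out values that fail, and drops readings with nothing usable left. The limits in `VALID_RANGES` are placeholder assumptions; real limits should come from the sensor's datasheet and the local climate. Returning a rejection count lets the job report how much data it ignored instead of discarding it silently.

```python
import math
from typing import Iterable, List, Tuple

# Placeholder plausible ranges per metric (assumptions, not sensor specs).
VALID_RANGES = {
    "moisture_pct": (0.0, 100.0),
    "temp_c": (-40.0, 60.0),
    "humidity_pct": (0.0, 100.0),
}


def clean(readings: Iterable[dict]) -> Tuple[List[dict], int]:
    """Null out impossible values and drop readings with no usable metric.

    Returns (cleaned readings, number of individual values rejected).
    """
    cleaned, rejected = [], 0
    for r in readings:
        out = dict(r)  # copy so the caller's data is not modified
        usable = False
        for metric, (lo, hi) in VALID_RANGES.items():
            v = out.get(metric)
            if v is None:  # already missing: nothing to check
                continue
            if isinstance(v, (int, float)) and not math.isnan(v) and lo <= v <= hi:
                usable = True
            else:
                out[metric] = None
                rejected += 1
        if usable:
            cleaned.append(out)
    return cleaned, rejected
```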

Another point to consider is data volume.
