Remote AWS IoT Batch Jobs: Handling Data From Afar, Explained

Think about all the devices out there that collect information, like sensors in a factory or smart gadgets in homes. These devices often send small bits of data, one at a time, to a central place. But what happens when you have a whole lot of this information, maybe from thousands of devices, and you need to work with it all at once? That's where a remote IoT batch job comes in handy, especially when you use a cloud service like Amazon Web Services (AWS). It helps you deal with big groups of data in a smart way, even if the devices are far away, and that is what we will explore here.

Managing information from many devices spread across different places can get tricky. When you want to do something with all that gathered information, like making reports or finding patterns, doing it piece by piece takes a lot of time. A batch job means you collect a large amount of information and process it together, all at once. This approach is more efficient, and it saves resources, too. It's like gathering all your laundry and washing it together instead of one sock at a time.

This article will show you how remote AWS IoT batch jobs work. We will look at why they are useful and give you an idea of how you might set one up. It's about getting the most out of your connected devices, no matter where they are. We'll talk about the different pieces of AWS that help make this happen, giving you a good picture of the whole process. So, let's get into it.

Table of Contents

- What Are Remote IoT Batch Jobs?

- AWS and IoT: A Natural Fit

- Designing Your Remote IoT Batch Job Example

- A Simple Scenario for a Remote IoT Batch Job

- Making Your Batch Jobs Better

- Frequently Asked Questions About Remote IoT Batch Jobs

- Looking Ahead with Remote IoT Batch Jobs

What Are Remote IoT Batch Jobs?

A remote IoT batch job is a way to process a lot of information from devices that are far away, all in one go. Imagine you have smart meters in many different homes, or maybe sensors on farm equipment spread out over a wide area. These devices send their readings over time. Instead of looking at each reading as it comes in, you might want to gather all the readings from a certain hour or day and then work with that whole collection together. This collection is what we call a "batch."

Why Use Batch Processing for IoT Data?

Processing information in batches has some real advantages. For one thing, it can be much more efficient. If you are doing something like calculating averages, finding trends, or checking for certain conditions across many data points, doing it all at once saves a lot of back-and-forth communication. It also helps manage the computing resources you use. Instead of having computers ready to work all the time for tiny bits of data, you can have them spring into action only when a big pile of data is ready to be handled. This can save money, since you are not paying for machines that sit idle.

Another good reason is that some types of analysis just work better with a full set of data. If you are trying to spot a pattern that only shows up when you look at a lot of readings together, a batch approach gives you that complete picture. It's like trying to see a forest by looking at one tree at a time; you need to step back to see the whole thing.

The Remote Aspect

The "remote" part of remote IoT batch jobs means the devices sending information are not right next to the computers doing the work. They could be in another city, another country, or even on a different continent. This distance means you need good, reliable ways to get the information from the devices to where it needs to be processed. Cloud services like AWS are well suited to this because they offer a global network that can handle information coming from almost anywhere.

When devices are remote, you also need to think about how they connect and how secure those connections are. You want to make sure the information gets where it needs to go without being messed with or seen by the wrong people. AWS has many tools to help keep these connections safe and sound. So, you can feel good about the data making its way to your batch job.

AWS and IoT: A Natural Fit

AWS, which stands for Amazon Web Services, is a collection of computing services offered over the internet. It's like having access to a huge set of tools and machines that you can use without having to buy and maintain them yourself. For anything related to the Internet of Things, or IoT, AWS has many services that work together very well. This makes it a good choice for setting up remote IoT batch jobs.

Key AWS Services for Remote IoT Batch Jobs

There are several AWS services that play a big part in making remote IoT batch jobs possible. You have services for connecting devices, for storing information, for running code, and for managing how all these pieces work together. Knowing a little about each of these helps you understand the whole picture. For instance, you might use one service to get the data, another to hold it, and yet another to do the actual calculations.

- AWS IoT Core: This is where your devices connect. It's like the main hub that receives all the messages from your remote sensors and gadgets. It helps manage millions of devices and keeps their communication safe.

- Amazon S3 (Simple Storage Service): Think of S3 as a giant, very reliable storage locker for all kinds of digital things. When your devices send information, you can store it here until it's time for the batch job to start. It's very good for holding large amounts of data, and it's quite flexible.

- AWS Lambda: This service lets you run small pieces of code without having to worry about servers. You just give it your code, and it runs it when needed. This is perfect for processing data in batches because you only pay for the time your code is actually running.

- AWS Step Functions: This service helps you arrange and manage a series of steps in a process. For a batch job, you might have steps like "collect data," "process data," and "save results." Step Functions helps make sure these steps happen in the right order and handles any issues along the way.

- Amazon EventBridge: This is a way to connect different parts of your AWS setup using events. An event could be something like "a new file of data just arrived." EventBridge can then tell another service, like Lambda, to start working on that data.

Data Collection with AWS IoT Core

Getting information from your remote devices is the first big step. AWS IoT Core acts as the main entry point for all your device data. It can handle messages from millions of devices at once, making sure each piece of information arrives safely. Devices can send their data using MQTT, a lightweight publish/subscribe protocol that works well even with small, simple devices. It's like a post office for your IoT messages.
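To make the device side a little more concrete, here is a minimal sketch of how one reading might be packaged before it is published over MQTT. The topic layout `devices/<device_id>/readings` and the field names are assumptions chosen for illustration; AWS IoT Core does not require any particular shape.

```python
import json
from datetime import datetime, timezone


def build_reading_message(device_id, temperature_c, when=None):
    """Return (topic, payload) for one sensor reading.

    The topic layout and field names are illustrative assumptions.
    """
    when = when or datetime.now(timezone.utc)
    topic = f"devices/{device_id}/readings"
    payload = json.dumps(
        {
            "device_id": device_id,
            "temperature_c": temperature_c,
            "timestamp": when.isoformat(),
        }
    )
    return topic, payload


topic, payload = build_reading_message(
    "sensor-001", 21.5, datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
)
print(topic)
print(payload)
```

An MQTT client on the device would then publish `payload` to `topic`; the client library and connection details are left out here on purpose.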

Once AWS IoT Core gets the information, it can send it to other AWS services using something called "rules." Each rule pairs a SQL-like query over incoming messages with one or more actions, so it works like an instruction that says, "If a message looks like this, send it over to that storage place." This is how you start to build the path for your data from the device all the way to your batch processing system.
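As a rough sketch, a rule that copies matching messages into S3 could be described with a payload like the one below. The topic filter, bucket name, role ARN, and key layout are all placeholders; the `${topic()}` and `${timestamp()}` parts are substitution templates that IoT Core fills in for each message.

```python
import json


def build_s3_rule_payload(bucket_name, role_arn):
    """Build a topic rule payload that copies matching messages to S3.

    The topic filter and key layout are assumptions for illustration.
    """
    return {
        # Match every message published under devices/<anything>/readings.
        "sql": "SELECT * FROM 'devices/+/readings'",
        "awsIotSqlVersion": "2016-03-23",
        "ruleDisabled": False,
        "actions": [
            {
                "s3": {
                    "roleArn": role_arn,
                    "bucketName": bucket_name,
                    # Resolved by IoT Core per message, not by Python.
                    "key": "raw/${topic()}/${timestamp()}.json",
                }
            }
        ],
    }


payload = build_s3_rule_payload(
    "example-iot-raw-data",
    "arn:aws:iam::123456789012:role/example-iot-to-s3",
)
print(json.dumps(payload, indent=2))
```

With boto3, a payload like this would typically be passed as `topicRulePayload` to the IoT client's `create_topic_rule` call, together with a rule name. The role it references needs permission to write to the bucket.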

Storing Your IoT Data

After your data comes into AWS IoT Core, you need a place to put it until it's ready for processing. Amazon S3 is often a good choice for this. It's a very cost-effective way to store huge amounts of information. You can set up your IoT Core rules to send all incoming data directly to an S3 bucket, which is simply S3's name for a storage area.

Storing data in S3 is also good because it's easy to get that data later when your batch job starts. Your processing code can simply read the files from S3. You can even organize your data in S3 by date and time, which helps when you want to process data from specific periods. This makes finding the right data for your batch job much simpler.
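One way to picture the "organize by date and time" idea is as a naming scheme for object keys. The sketch below uses a hypothetical `raw/YYYY/MM/DD/<device_id>/HHMMSS.json` layout, so that everything from one day shares a single prefix the batch job can list.

```python
from datetime import date, datetime


def daily_prefix(day, root="raw"):
    """Prefix shared by every object written on the given day."""
    return f"{root}/{day:%Y/%m/%d}/"


def object_key(device_id, when, root="raw"):
    """Full key for one reading, grouped by day and then by device."""
    return f"{daily_prefix(when.date(), root)}{device_id}/{when:%H%M%S}.json"


print(daily_prefix(date(2024, 5, 1)))
print(object_key("sensor-001", datetime(2024, 5, 1, 9, 30, 0)))
```

Listing objects under `daily_prefix(...)` then returns only that day's files, without scanning the rest of the bucket.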

Designing Your Remote IoT Batch Job Example

Now, let's think about how you might put these pieces together to build a remote AWS IoT batch job. The idea is to have a system that automatically collects data, waits until enough data is there, and then processes it all at once. This kind of system works without much human help once it's set up, which is pretty neat. It's like setting up a machine that does its work on a schedule, or when it sees a lot of raw material ready for it.

Triggering the Batch Process

A batch job needs something to tell it when to start. There are a few ways to do this. One common way is to trigger it based on time. For example, you might want to process all the data collected every hour, or every day at midnight. You can use a service like Amazon EventBridge to set up a schedule for this. It's like setting an alarm clock for your data processing.
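A time-based trigger mostly comes down to a schedule expression. The sketch below builds the parameters for an EventBridge rule in two flavors: a cron expression for midnight UTC every day, and a rate expression for hourly runs. The rule name is a placeholder.

```python
def build_schedule_rule(name="example-iot-batch", hourly=False):
    """Parameters for a scheduled EventBridge rule.

    EventBridge cron fields are:
    minutes hours day-of-month month day-of-week year (evaluated in UTC).
    """
    expression = "rate(1 hour)" if hourly else "cron(0 0 * * ? *)"
    return {
        "Name": name,
        "ScheduleExpression": expression,
        "State": "ENABLED",
    }


print(build_schedule_rule())
print(build_schedule_rule(hourly=True))
```

With boto3, these parameters match what the EventBridge client's `put_rule` call expects; the function to run is attached to the rule afterwards as a target with `put_targets`.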

Another way to start a batch job is in response to new data arriving. Maybe you want to process data whenever a new file appears in your S3 storage, or only when that file is above a certain size. EventBridge can help here by reacting to S3 "Object Created" events, once EventBridge notifications are turned on for the bucket. This means your job only runs when there's actually something big enough to work on, which is quite efficient.
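For the data-driven trigger, the matching logic lives in an EventBridge event pattern. Here is a minimal sketch of a pattern that matches new objects in one bucket that are at least a given size; the bucket name and the 1 MiB threshold are arbitrary examples.

```python
import json


def build_object_created_pattern(bucket_name, min_size_bytes):
    """Event pattern for S3 'Object Created' events above a size threshold."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket_name]},
            # Numeric matching: only objects of at least min_size_bytes.
            "object": {"size": [{"numeric": [">=", min_size_bytes]}]},
        },
    }


pattern = build_object_created_pattern("example-iot-raw-data", 1024 * 1024)
print(json.dumps(pattern, indent=2))
```

The JSON form of this pattern is what would go into the rule's `EventPattern`. Note that S3 emits one event per object, so this filters on the size of each file rather than on the total amount of data collected.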

Processing the Data with Serverless Functions

For the actual work of processing the data, AWS Lambda is a really good fit. When your batch job is triggered, Lambda can run a piece of code that reads the collected data from S3. This code can then do whatever calculations or transformations you need. For example, it could:

- Read all the sensor readings from the last hour.

- Calculate the average temperature across all devices.

- Find any readings that are outside a normal range.

- Clean up the data, removing any errors or incomplete entries.

The nice thing about Lambda is that you don't need to worry about servers. AWS handles all that for you. Your code just runs when it's needed, and you only pay for the time it takes to run, which is very helpful for jobs that only happen sometimes, or for a short time. It's like having a helper who only shows up when there's a specific task to do, and then leaves when it's done.
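The kinds of processing listed above can be sketched in plain Python, independent of any AWS call. The example below assumes each reading is a JSON line with hypothetical `device_id` and `temperature_c` fields, and treats -40 to 60 degrees as the "normal" range purely for illustration.

```python
import json
from statistics import mean


def clean_readings(lines):
    """Parse JSON lines, dropping malformed or incomplete entries."""
    cleaned = []
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # corrupted line: skip it
        if not isinstance(record, dict) or "device_id" not in record:
            continue
        temp = record.get("temperature_c")
        if isinstance(temp, (int, float)) and not isinstance(temp, bool):
            cleaned.append(record)
    return cleaned


def summarize(readings, low=-40.0, high=60.0):
    """Average temperature plus the readings outside [low, high]."""
    temps = [r["temperature_c"] for r in readings]
    outliers = [r for r in readings if not low <= r["temperature_c"] <= high]
    return {
        "count": len(readings),
        "average_temperature_c": mean(temps) if temps else None,
        "out_of_range": outliers,
    }


raw_lines = [
    '{"device_id": "a", "temperature_c": 20.0}',
    '{"device_id": "b", "temperature_c": 22.0}',
    "not json at all",
    '{"device_id": "c"}',
    '{"device_id": "d", "temperature_c": 90.0}',
]
print(summarize(clean_readings(raw_lines)))
```

Here the average is taken over every valid reading, including the out-of-range one; whether outliers should be excluded from the average is a choice that depends on the data.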

Managing the Job Flow

Sometimes, a batch job isn't just one simple step. It might involve several different actions that need to happen in a specific order. For example, first you might need to prepare the data, then process it, then save the results in a different place. AWS Step Functions is excellent for managing these kinds of multi-step processes. It lets you define a "workflow" where each step is a separate task.

Step Functions can make sure each part of your batch job finishes before the next one starts. If something goes wrong in one step, it can also handle retries or send alerts. This gives you a lot of control and makes your batch jobs more reliable. It's almost like having a detailed checklist and someone making sure each item gets done, and in the right order.
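A workflow like this is written in Amazon States Language, which is JSON. The sketch below builds a three-step definition (prepare, process, save) where each task retries a few times and hands off to a failure-notification step if it still cannot finish. The state names, retry numbers, and ARNs are all placeholders.

```python
import json


def build_state_machine(prepare_arn, process_arn, save_arn, notify_arn):
    """Build a sequential workflow definition with retries and a failure path."""

    def task(resource, next_state=None):
        state = {
            "Type": "Task",
            "Resource": resource,
            # Retry failed tasks up to 3 times, doubling the wait each time.
            "Retry": [
                {
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 10,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }
            ],
            # Anything still failing is routed to the notification step.
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "NotifyFailure"}],
        }
        if next_state:
            state["Next"] = next_state
        else:
            state["End"] = True
        return state

    return {
        "Comment": "Illustrative IoT batch workflow",
        "StartAt": "PrepareData",
        "States": {
            "PrepareData": task(prepare_arn, "ProcessData"),
            "ProcessData": task(process_arn, "SaveResults"),
            "SaveResults": task(save_arn),
            "NotifyFailure": {
                "Type": "Task",
                "Resource": notify_arn,
                "Next": "JobFailed",
            },
            "JobFailed": {"Type": "Fail", "Error": "BatchJobFailed"},
        },
    }


arn = "arn:aws:lambda:us-east-1:123456789012:function:example-{}"
definition = build_state_machine(
    arn.format("prepare"), arn.format("process"),
    arn.format("save"), arn.format("notify"),
)
print(json.dumps(definition, indent=2))
```

The JSON string produced here is the kind of document Step Functions takes as a state machine definition.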

Handling Results and Errors

After your batch job runs, you'll want to do something with the results. This might mean saving the processed data to another S3 bucket, putting it into a database, or even sending an alert if something unusual was found. Your Lambda function can handle saving the results. For example, if you calculated daily averages, you might store those averages in a separate file or table for later viewing.

It's also important to plan for when things don't go as expected. What if a data file is corrupted? What if the processing code runs into an error? AWS services have ways to report these issues. You can set up alerts to notify you if a batch job fails. This way, you can quickly find out about problems and fix them, which is important for keeping things running smoothly.
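Inside the processing code itself, one simple pattern is to keep going when a single file is bad, remember what failed, and send one notification at the end. The sketch below takes the notifier as a plain callable, which is an assumption made so the example runs anywhere.

```python
import json


def process_files(files, notify):
    """Count records per file, collecting failures instead of stopping.

    files:  mapping of file name -> raw text (one JSON record per line)
    notify: callable that receives a single message string
    """
    results, failures = {}, []
    for name, body in files.items():
        try:
            records = [json.loads(line) for line in body.splitlines() if line.strip()]
        except json.JSONDecodeError as exc:
            failures.append(f"{name}: {exc.msg}")
            continue
        results[name] = len(records)
    if failures:
        notify("Batch job finished with errors:\n" + "\n".join(failures))
    return results, failures


sent = []
results, failures = process_files(
    {"good.json": '{"a": 1}\n{"a": 2}', "bad.json": '{"a": '},
    sent.append,
)
print(results)
print(failures)
```

In an AWS setup, `notify` might wrap something like an Amazon SNS publish call so the message reaches you by email or text.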

A Simple Scenario for a Remote IoT Batch Job

Let's consider a practical example of a remote AWS IoT batch job. Imagine you have many small weather stations spread across different farms. Each station measures temperature, humidity, and rainfall every 10 minutes. This information is sent to AWS IoT Core. You want to get a daily summary of the weather conditions for each farm, including the average temperature and total rainfall, and then store this summary somewhere for your farmers to see.
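A quick bit of arithmetic shows why batching suits this scenario. A station reporting every 10 minutes produces 144 readings a day; the figure of 500 stations below is a made-up number used only to show how fast the total grows.

```python
def readings_per_day(interval_minutes, stations=1):
    """Number of readings produced per day at a fixed reporting interval."""
    per_station = (24 * 60) // interval_minutes
    return per_station * stations


print(readings_per_day(10))       # 144 readings from one station
print(readings_per_day(10, 500))  # 72000 readings from 500 stations
```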

Step-by-Step Walkthrough of the Example

- Data Collection: Each remote weather station sends its readings to AWS IoT Core. The messages contain details like the farm ID, device ID, temperature, humidity, rainfall, and the time of the reading.

- Data Storage: An AWS IoT Core rule is set up. This rule tells IoT Core to take all incoming weather readings and save them as small files in an Amazon S3 bucket. The files are organized by date and farm ID, which makes it easier to find them later.

- Batch Job Trigger: An Amazon EventBridge rule is set up to run once every day at midnight, say, for the previous day's data. This rule sends a signal to start the batch processing.

- Data Processing (Lambda): When EventBridge sends its signal, an AWS Lambda function starts running. This Lambda function does the following:

  - It looks at the S3 bucket for all the weather data files from the previous day.

  - It reads all these files, gathering all the temperature, humidity, and rainfall readings for each farm.

  - For each farm, it calculates the average temperature and the total rainfall for that day.

  - It then creates a new summary record for each farm, with the farm ID, date, average temperature, and total rainfall.

- Storing Results: The Lambda function then takes these daily summary records and saves them into a different location. This could be another S3 bucket for historical records, or perhaps an Amazon DynamoDB table if you want to quickly look up daily summaries for specific farms.

- Alerts (Optional): If the Lambda function runs into any problems, like not being able to find data for a farm, it can send a notification to you, perhaps through a simple email or text message.

This example shows how a remote IoT batch job can automate the process of turning raw, incoming data into useful summaries. It takes away the need for someone to manually gather and calculate these numbers every day.
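The processing and storing steps of the walkthrough can be sketched roughly as follows. This is one possible shape, not a definitive implementation: it assumes one JSON reading per object under a hypothetical `raw/<date>/` prefix, with hypothetical fields `farm_id`, `temperature_c`, and `rainfall_mm`. The S3 client is passed in, so the same code works with a boto3 client or a stand-in.

```python
import json
from collections import defaultdict


def list_keys(s3, bucket, prefix):
    """Yield every object key under a prefix, following pagination."""
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3.list_objects_v2(**kwargs)
        for obj in page.get("Contents", []):
            yield obj["Key"]
        if not page.get("IsTruncated"):
            break
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


def summarize_by_farm(readings, day):
    """Average temperature and total rainfall per farm for one day."""
    totals = defaultdict(lambda: {"temps": [], "rain": 0.0})
    for reading in readings:
        farm = totals[reading["farm_id"]]
        farm["temps"].append(reading["temperature_c"])
        farm["rain"] += reading["rainfall_mm"]
    return [
        {
            "farm_id": farm_id,
            "date": day,
            "avg_temperature_c": round(sum(data["temps"]) / len(data["temps"]), 2),
            "total_rainfall_mm": round(data["rain"], 2),
        }
        for farm_id, data in sorted(totals.items())
    ]


def run_daily_job(s3, raw_bucket, results_bucket, day):
    """Read one day's readings, summarize them, and store the summary."""
    readings = []
    for key in list_keys(s3, raw_bucket, f"raw/{day}/"):
        body = s3.get_object(Bucket=raw_bucket, Key=key)["Body"].read()
        readings.append(json.loads(body))
    summaries = summarize_by_farm(readings, day)
    s3.put_object(
        Bucket=results_bucket,
        Key=f"summaries/{day}.json",
        Body=json.dumps(summaries).encode("utf-8"),
    )
    return summaries
```

In a Lambda function, a handler would create the client once with `boto3.client("s3")`, work out the previous day's date, and call `run_daily_job`. Writing the summaries to a DynamoDB table instead of S3 would only change the last step.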

Benefits of This Approach

Using this kind of remote AWS IoT batch job has many benefits. For one, it's very efficient. You're not constantly running expensive computing resources. They only get used when a batch of data is ready to be worked on. Because you only pay for what you use, this can save you money.

It's also very scalable. If you add more weather stations, the system can handle the increased amount of data without you having to make big changes. AWS services are built to grow with your needs, so you don't have to worry about outgrowing your setup. This is a big plus for businesses that might start small and then grow very quickly.

Moreover, it makes your data more useful. By regularly processing data into summaries, you get insights that are easy to understand and act on. Farmers can quickly see daily weather patterns without having to sort through thousands of individual sensor readings. This means the data becomes a tool for making better decisions, and that is very important.

Finally, it's reliable. AWS services are designed to be highly available, meaning they are almost always working. If one step has a temporary issue, it can often be retried rather than failing the whole job. This means your batch jobs are likely to run successfully, day after day, giving you consistent results.

Making Your Batch Jobs Better

Once you have a remote AWS IoT batch job set up, there are always ways to make it even better. Thinking about things like how secure your data is, how you keep an eye on the system, and how much it costs can help you get the most out of your setup. These considerations help ensure your system runs well over time.

Keeping Things Secure

Security is a big deal when you are dealing with data from remote devices. You want to make sure that only authorized devices can send data and that only authorized people or systems can access and process that data. AWS provides many tools to help with this. For example, AWS IoT Core can authenticate devices with X.509 client certificates over TLS, so each device has to prove it is who it says it is.

You can also control who can access your S3 buckets and Lambda functions using AWS Identity and Access Management (IAM). This lets you set very specific rules about who can do what. It's like having a very strict bouncer for your data, only letting in those with the right credentials. This matters for both privacy and data integrity.
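As an illustration of "very specific rules," here is a sketch of an IAM policy document for the batch function's role. It allows listing and reading raw data and writing summaries, and nothing else. The bucket names and the `summaries/` prefix are placeholders.

```python
import json


def build_batch_job_policy(raw_bucket, results_bucket):
    """Least-privilege S3 policy for a batch function (illustrative)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListRawData",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                # ListBucket applies to the bucket itself.
                "Resource": [f"arn:aws:s3:::{raw_bucket}"],
            },
            {
                "Sid": "ReadRawData",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # GetObject applies to the objects inside the bucket.
                "Resource": [f"arn:aws:s3:::{raw_bucket}/*"],
            },
            {
                "Sid": "WriteSummaries",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{results_bucket}/summaries/*"],
            },
        ],
    }


policy = build_batch_job_policy("example-iot-raw-data", "example-iot-results")
print(json.dumps(policy, indent=2))
```

A role with only this policy could not delete raw data or write anywhere outside the summaries prefix, which limits the damage if the function's code misbehaves.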

Watching What Happens

It's a good idea to keep an eye on your batch jobs to make sure they are running correctly. Amazon CloudWatch is a service that helps you monitor your applications and resources. You can set up CloudWatch to collect logs from your Lambda functions, so you can see what happened during a batch run. You can also create alarms that tell you if a job fails or if it takes too long to complete. This way, you can quickly spot problems and fix them.

Watching your system also helps you understand how much resource it's using. If your Lambda function is suddenly taking much longer to run, or if it's using a lot more memory, CloudWatch can show you this. This information helps you make adjustments to keep your costs down and your system performing well. It's like having a dashboard for your whole operation.
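The two alarms mentioned above, one for failures and one for slow runs, can be sketched as parameter sets built on Lambda's standard `Errors` and `Duration` metrics. The function name, notification topic ARN, and thresholds are placeholders.

```python
def build_error_alarm(function_name, alarm_topic_arn):
    """Alarm when the function reports at least one error in 5 minutes."""
    return {
        "AlarmName": f"{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 300,
        "EvaluationPeriods": 1,
        "Threshold": 1,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # No invocations in a period is normal for a scheduled job.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [alarm_topic_arn],
    }


def build_duration_alarm(function_name, alarm_topic_arn, max_ms):
    """Alarm when the slowest run in a period exceeds max_ms milliseconds."""
    alarm = build_error_alarm(function_name, alarm_topic_arn)
    alarm.update(
        {
            "AlarmName": f"{function_name}-slow",
            "MetricName": "Duration",
            "Statistic": "Maximum",
            "Threshold": max_ms,
            "ComparisonOperator": "GreaterThanThreshold",
        }
    )
    return alarm


topic = "arn:aws:sns:us-east-1:123456789012:example-batch-alerts"
print(build_error_alarm("example-daily-summary", topic))
print(build_duration_alarm("example-daily-summary", topic, 60000))
```

With boto3, each dictionary lines up with the keyword arguments of the CloudWatch client's `put_metric_alarm` call.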

Cost Considerations

One of the nice things about using AWS for remote IoT batch jobs is that you typically only pay for what you use. Services like Lambda and S3 are priced based on actual usage. This means you don't have to buy expensive servers that sit idle most of the time. However, it's still good to keep an eye on your costs.

You can use AWS Cost Explorer to see how much you are spending on each service. This helps you find areas where you might be able to save money. For instance, if your S3 storage is getting very large, you might look into ways to archive older data to cheaper storage tiers. Being smart about how you use resources can lead to significant savings over time.
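Archiving older data is usually done with an S3 lifecycle rule rather than by hand. The sketch below builds one such configuration: objects under a prefix move to an infrequent-access class after 30 days and to an archive class after 90. The prefix and day counts are arbitrary examples, not recommendations.

```python
import json


def build_archive_lifecycle(prefix="raw/", days_to_ia=30, days_to_glacier=90):
    """Lifecycle configuration that moves old raw readings to cheaper tiers."""
    return {
        "Rules": [
            {
                "ID": "archive-old-raw-readings",
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": days_to_ia, "StorageClass": "STANDARD_IA"},
                    {"Days": days_to_glacier, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


config = build_archive_lifecycle()
print(json.dumps(config, indent=2))
```

With boto3, this dictionary is the shape the S3 client's `put_bucket_lifecycle_configuration` call takes as `LifecycleConfiguration`. Cheaper tiers often come with retrieval fees and minimum storage durations, so they suit data the batch job has already processed.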

Frequently Asked Questions About Remote IoT Batch Jobs

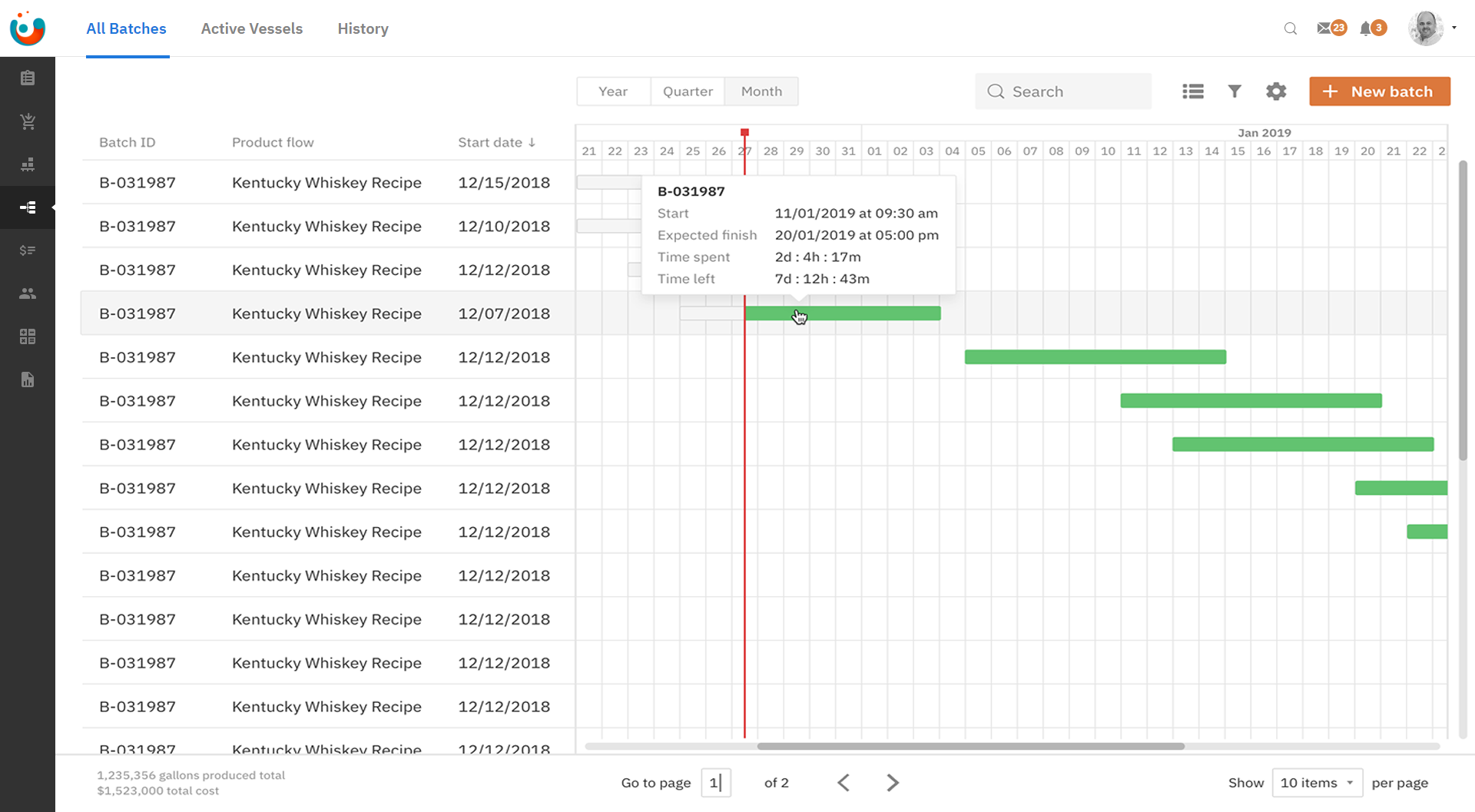
